Benchmarks

Colossal-AI's benchmark results show that it is the fastest and most cost-efficient solution for your deep learning infrastructure needs.

Top performance results in a nutshell

10x faster training time

50%

14x

11x

24x

50%

50%

45%

1 GPU

Best performance in its class

Colossal-AI delivers the best performance and provides significant cost savings compared to default framework configurations and to its competitors.

| Compared with | Colossal-AI advantage |
| --- | --- |
| PyTorch is a machine learning framework for Python. | 120x larger model sizes |
| Microsoft DeepSpeed is a deep learning optimization library. | 3x higher throughput |
| NVIDIA NeMo Megatron is a framework to build and deploy LLMs. | 5x faster training |

Training benchmarks

Colossal-AI supports any machine learning training process, in which an algorithm is fed sufficient training data to learn from.

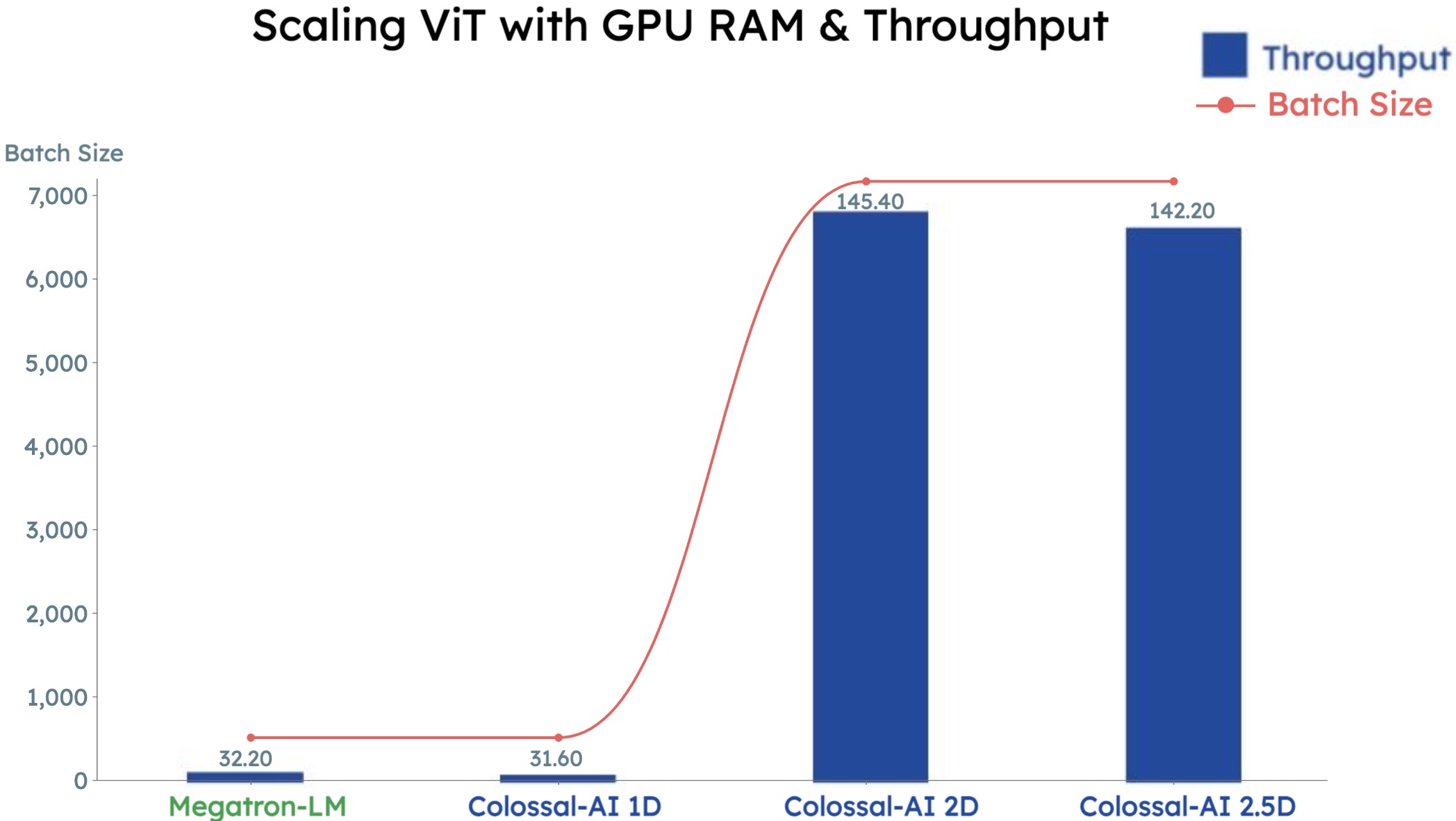

ViT model

The Vision Transformer, or ViT, is a model for image classification that employs a Transformer-like architecture over patches of the image. ViTs are being adopted in a wide range of computer vision tasks, from image classification to object detection and segmentation.
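To illustrate what "patches of the image" means, here is a minimal sketch in plain Python. It is not Colossal-AI code; the function name `split_into_patches` and the toy 4x4 single-channel image are assumptions made for this example. A real ViT would additionally project each flattened patch through a learned linear layer and add position embeddings before the Transformer layers.

```python
from typing import List

Image = List[List[float]]  # single-channel image stored as rows of pixel values


def split_into_patches(image: Image, patch_size: int) -> List[List[float]]:
    """Split an H x W image into flattened, non-overlapping square patches."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            # Flatten one patch row by row into a single vector.
            patch = [
                image[row][col]
                for row in range(top, top + patch_size)
                for col in range(left, left + patch_size)
            ]
            patches.append(patch)
    return patches


# Hypothetical toy input: a 4x4 image whose pixel values are 0..15.
toy_image = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
toy_patches = split_into_patches(toy_image, patch_size=2)
print(len(toy_patches), toy_patches[0])  # 4 patches; the first is [0.0, 1.0, 4.0, 5.0]
```

The resulting list of patch vectors plays the role that a sequence of word tokens plays in a text Transformer.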

Colossal-AI vs. Megatron

- Achieve 14x larger batch sizes with Colossal-AI
- and 5x faster training for ViT

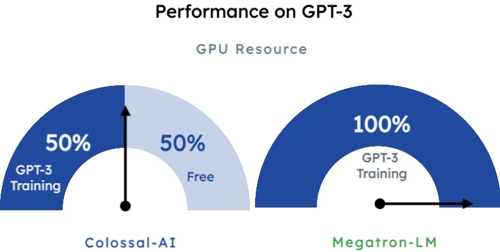

GPT-3 model

Trained on text from the internet, GPT-3 generates realistic, human-like text. It has been used to create articles, poetry, stories, news reports, and dialogue, producing large amounts of quality copy from only a small amount of input text.

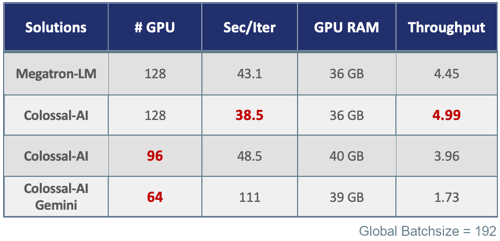

Colossal-AI vs. Megatron

- You can save 50% of your GPU resources with Colossal-AI
- and achieve a 10.7% acceleration
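The figures above are percentages, while other results on this page are quoted as multipliers. As a rough sketch of how the two relate, assuming "acceleration" means a throughput gain on a fixed workload (the page does not define it), the helpers below convert between them. The function names, the 100-hour job, and the 8-GPU cluster are hypothetical values chosen only for illustration, not measured results.

```python
def speedup_factor(percent_acceleration: float) -> float:
    """Convert a percentage throughput gain into a multiplier (10.7% -> about 1.107x)."""
    return 1.0 + percent_acceleration / 100.0


def accelerated_hours(baseline_hours: float, percent_acceleration: float) -> float:
    """Wall-clock time for the same workload after the given throughput gain."""
    return baseline_hours / speedup_factor(percent_acceleration)


def gpus_after_saving(baseline_gpus: int, percent_saved: float) -> float:
    """Number of GPUs still needed after saving the given percentage."""
    return baseline_gpus * (1.0 - percent_saved / 100.0)


# Hypothetical baseline: a 100-hour training job on 8 GPUs.
new_hours = accelerated_hours(100.0, 10.7)
remaining_gpus = gpus_after_saving(8, 50.0)
print(f"{new_hours:.1f} hours, {remaining_gpus:.0f} GPUs")
```

Note that a 10.7% throughput gain shortens the job by a little under 10%, not by 10.7%, because time scales with the inverse of throughput.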

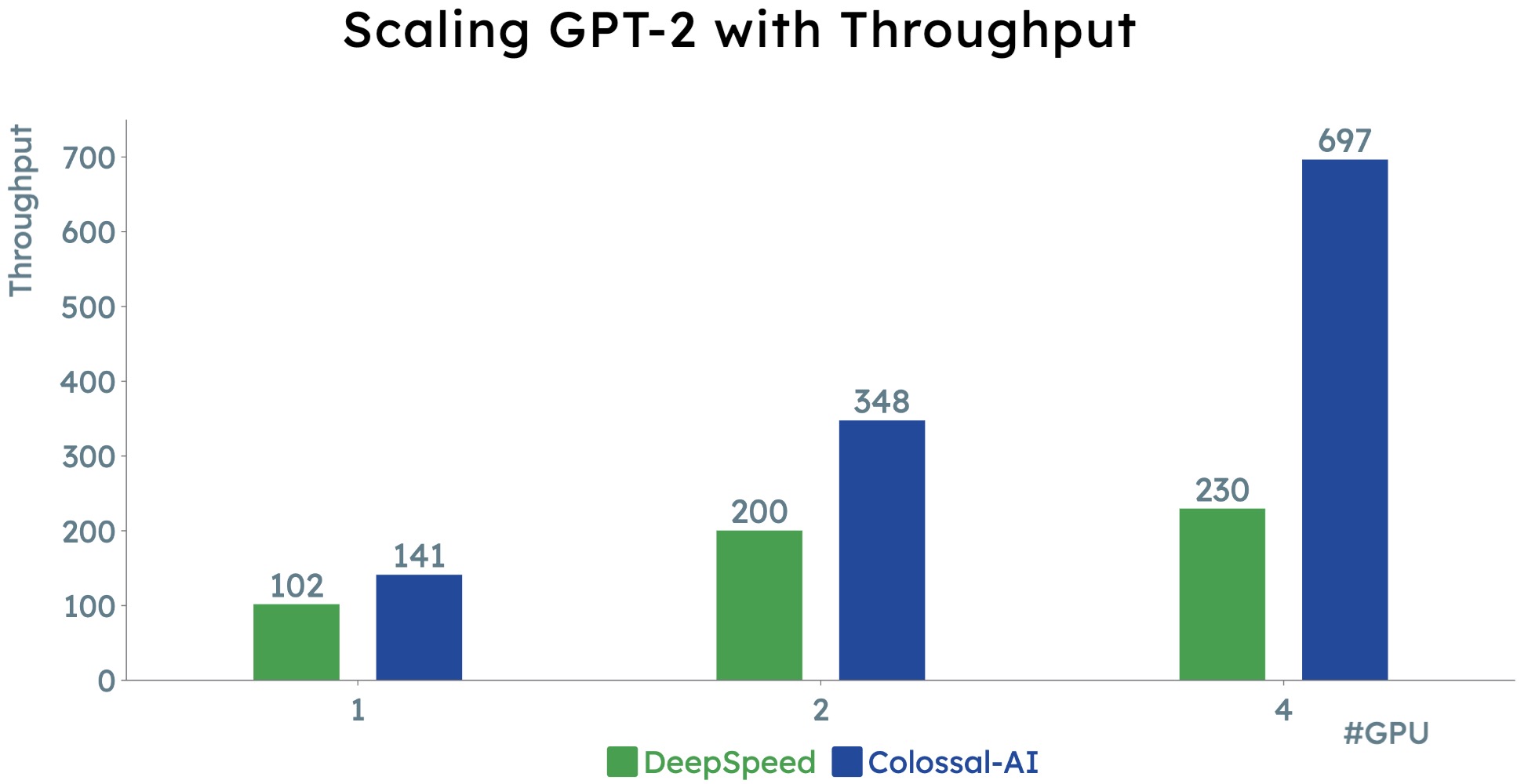

GPT-2 model

GPT-2 is a transformer-based language model released by OpenAI in February 2019. It is trained without labeled data for a single purpose: predicting the next word(s) in a sentence. GPT-2 stands for “Generative Pre-trained Transformer 2”.

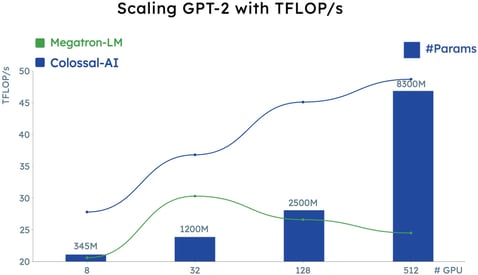

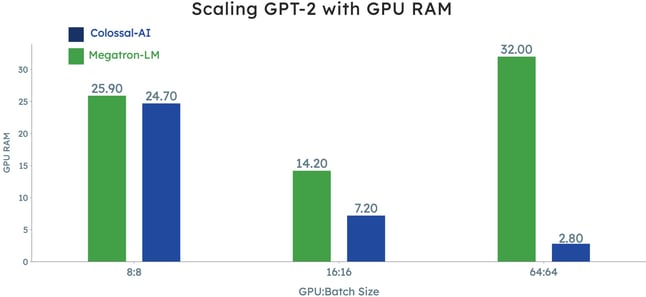

Colossal-AI vs. Megatron

- You benefit from an 11x lower GPU memory consumption with Colossal-AI
- and superlinear scaling efficiency due to its tensor parallelism

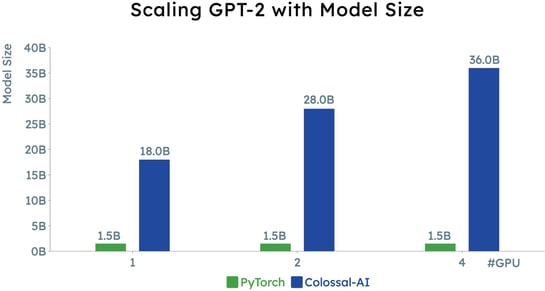

Colossal-AI vs. PyTorch

- Scale to a 24x larger model size on the same hardware

Colossal-AI vs. DeepSpeed

- Benefit from a 3x speedup on the same computing devices

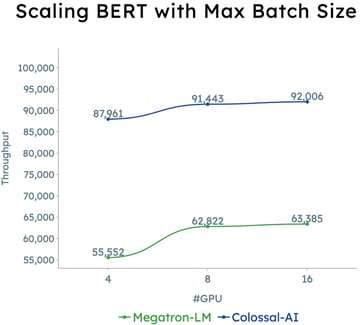

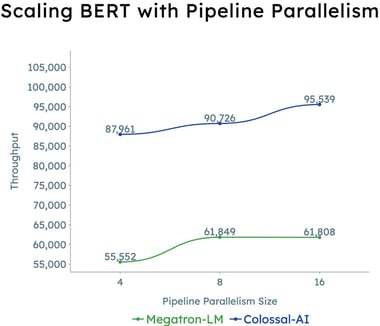

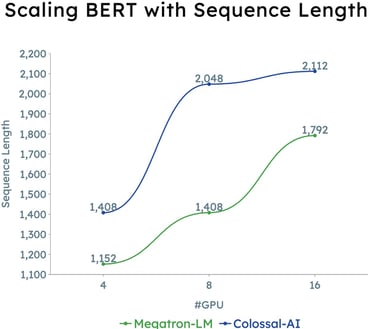

BERT model

BERT (Bidirectional Encoder Representations from Transformers) is an open-source language model for natural language processing (NLP). BERT is designed to help computers understand the meaning of ambiguous language in text by using surrounding text to establish context.

Colossal-AI vs. Megatron

- Train 2x faster with Colossal-AI
- or run a 50% longer sequence length

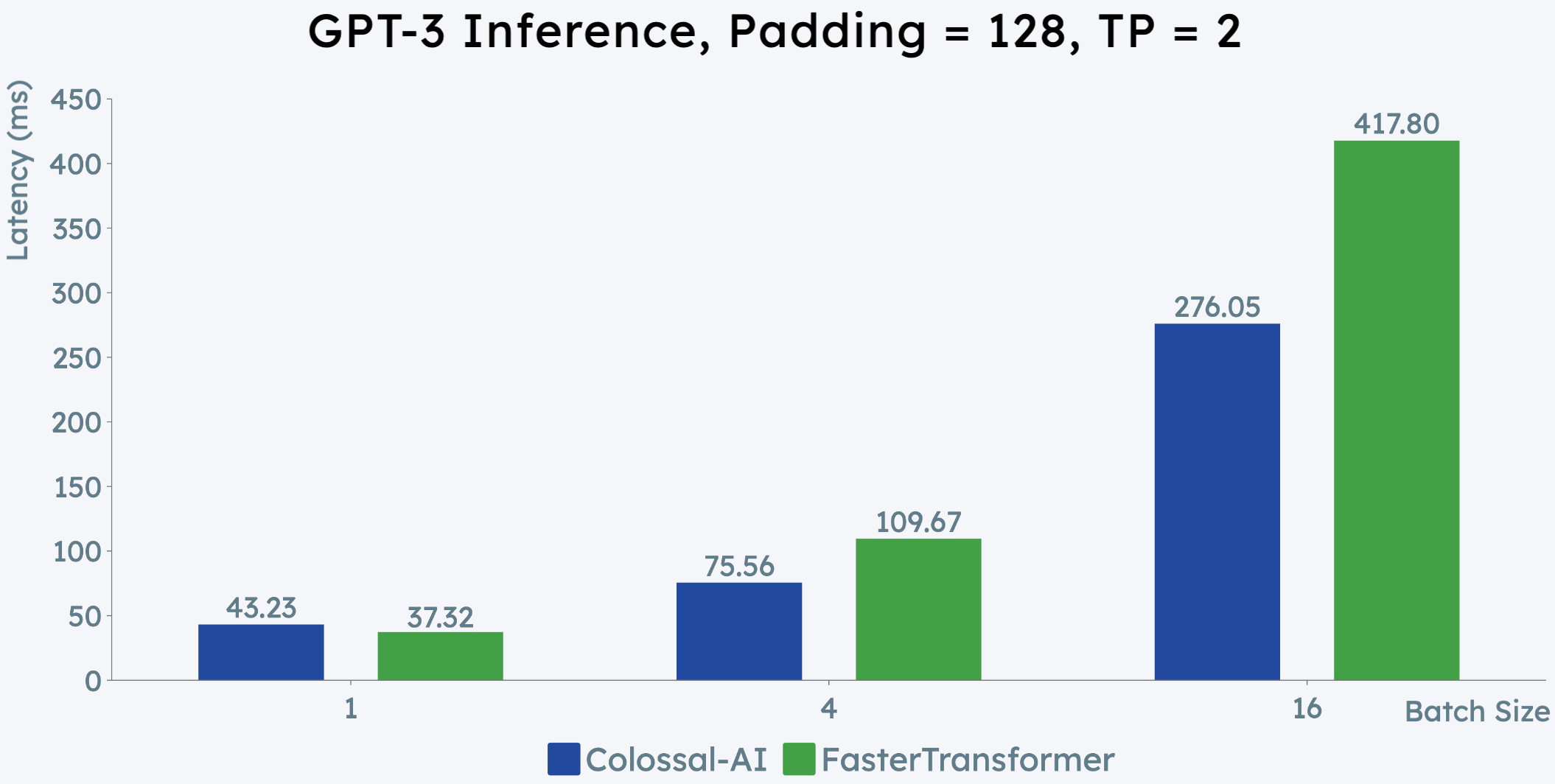

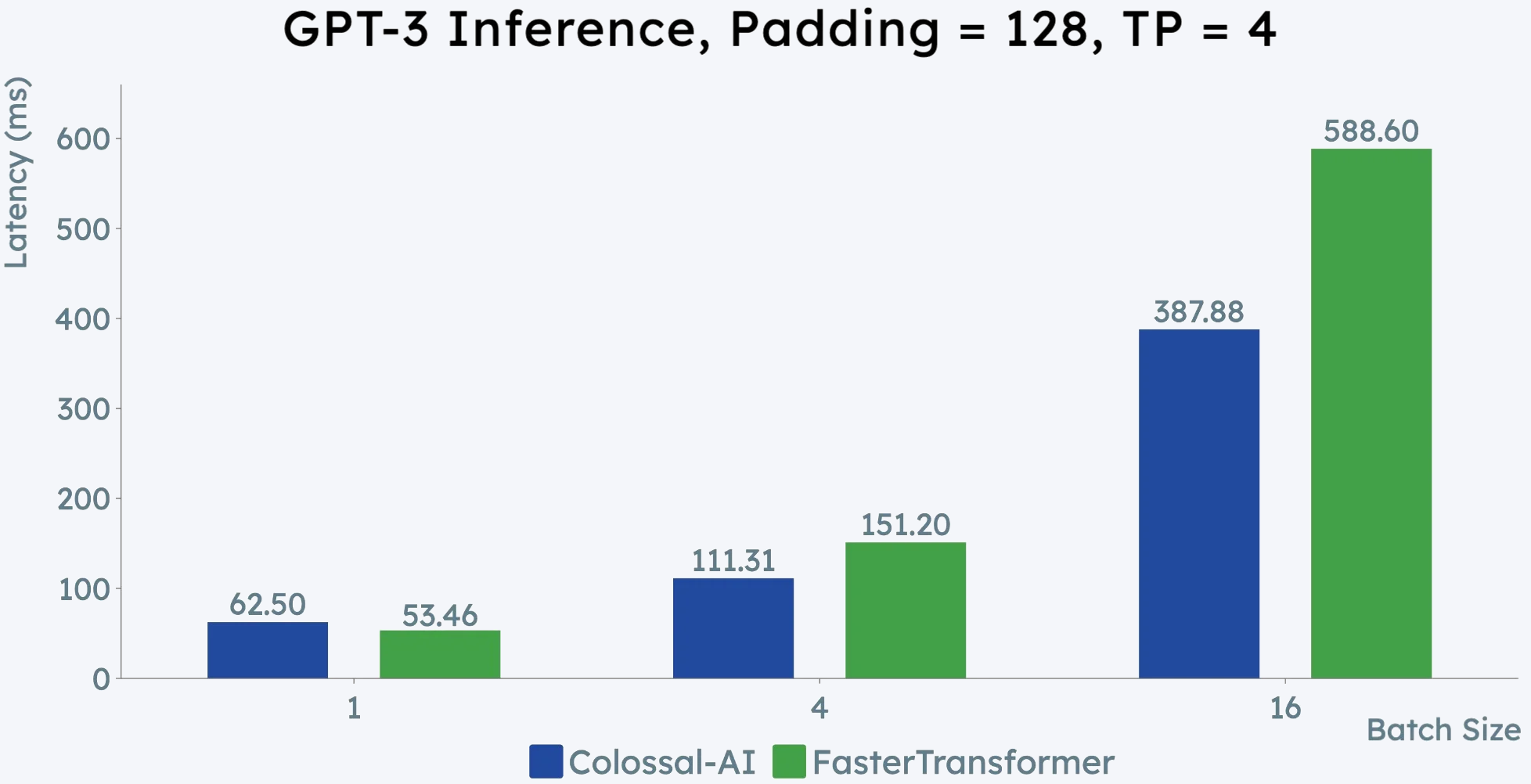

Inference benchmarks

During inference, a trained model processes unseen data to produce results. Colossal-AI lets you run inference at higher speed and larger scale, with inference optimizations provided through the Energon-AI extension.

GPT-3 model

Colossal-AI vs. NVIDIA FasterTransformer

- Colossal-AI enables you to achieve 50% inference acceleration on the same hardware infrastructure

Fine-tuning benchmarks

Fine-tuning takes a model that has already been trained for one task and adapts it to perform a second, similar task. Colossal-AI makes this step faster.

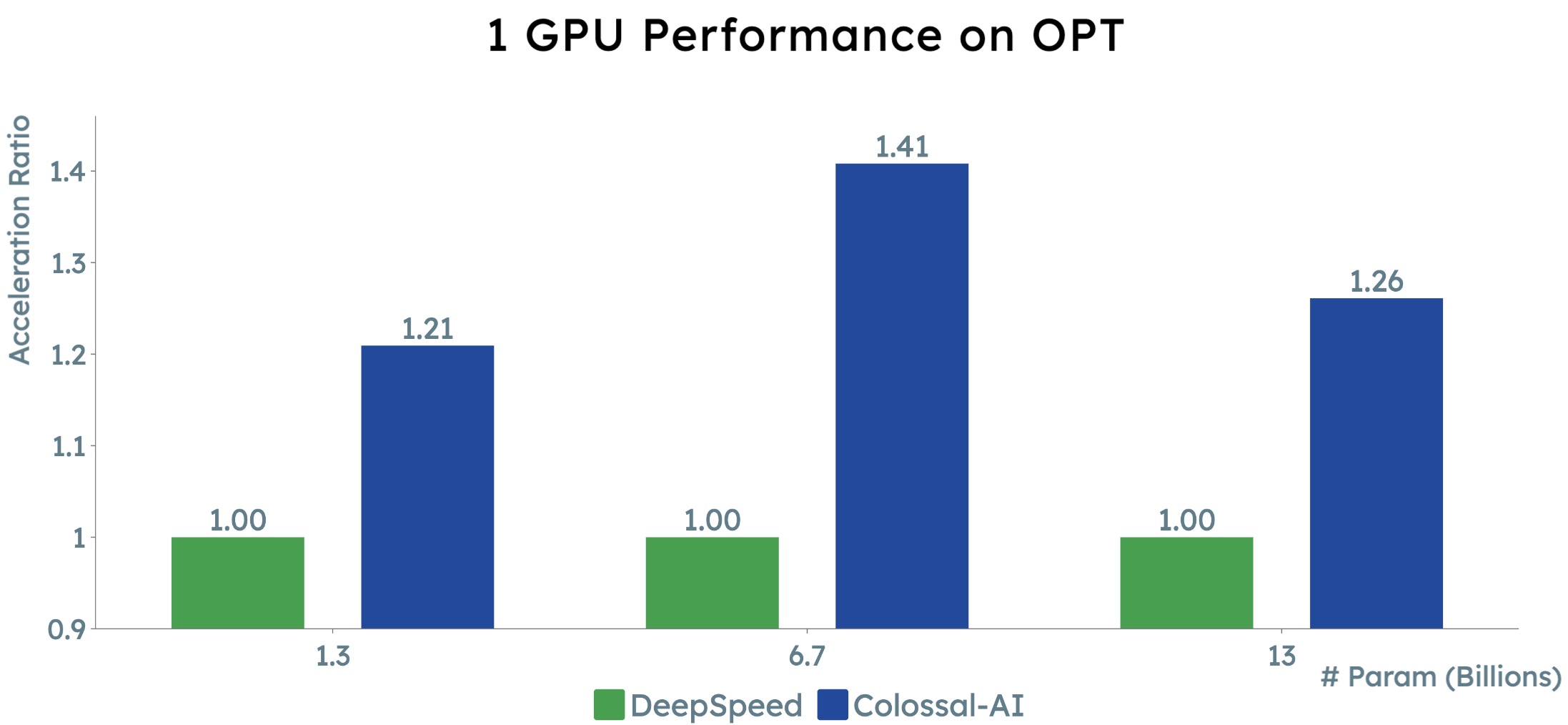

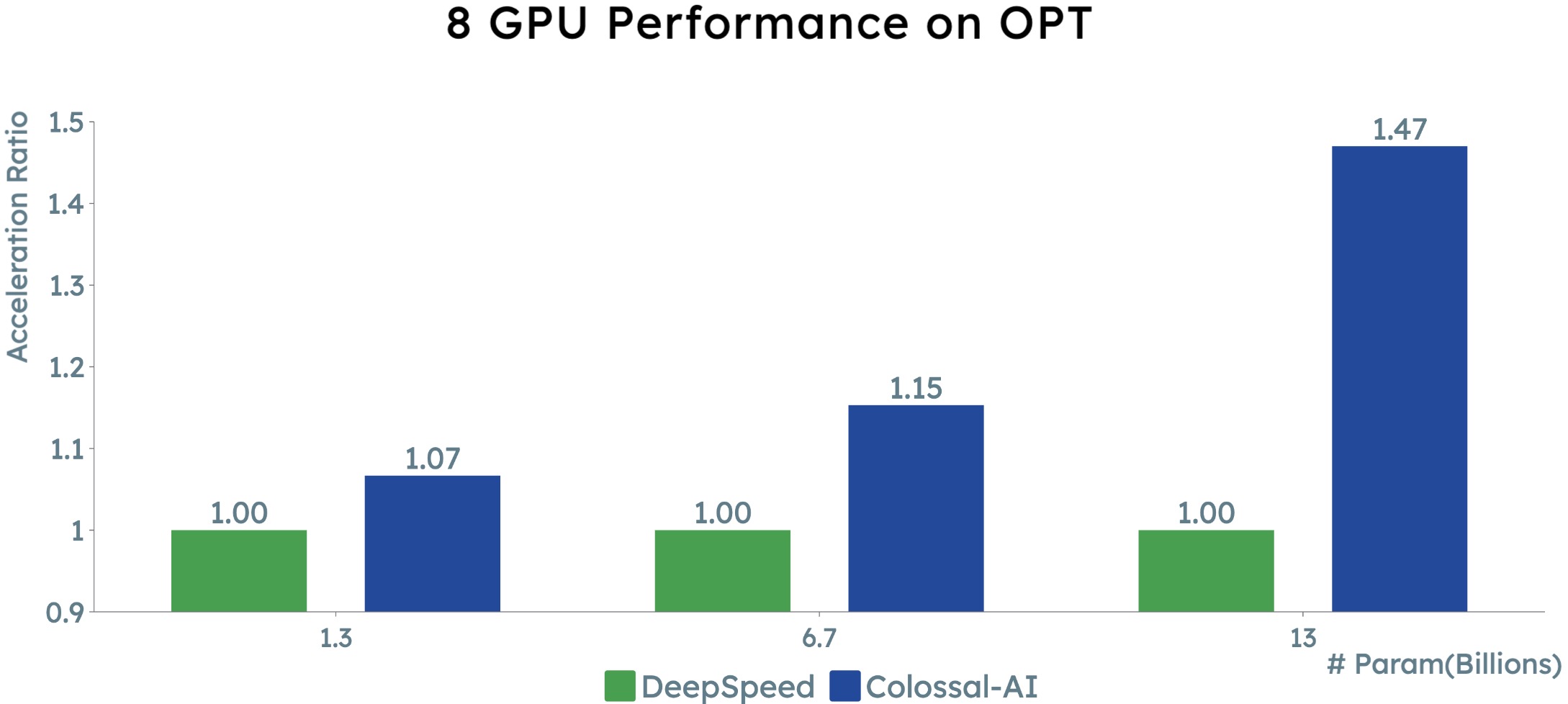

OPT model

OPT (Open Pre-trained Transformer) is a family of large language models with billions of parameters, introduced by Meta AI. It can be used to generate creative text, solve simple math problems, and answer reading comprehension questions.

Colossal-AI vs. DeepSpeed

- With Colossal-AI, a 45% speedup when fine-tuning OPT is possible
- at a low cost in lines of code