The development cost of video generation models has been cut by 50%! Open-source solutions are now available with H200 GPU vouchers


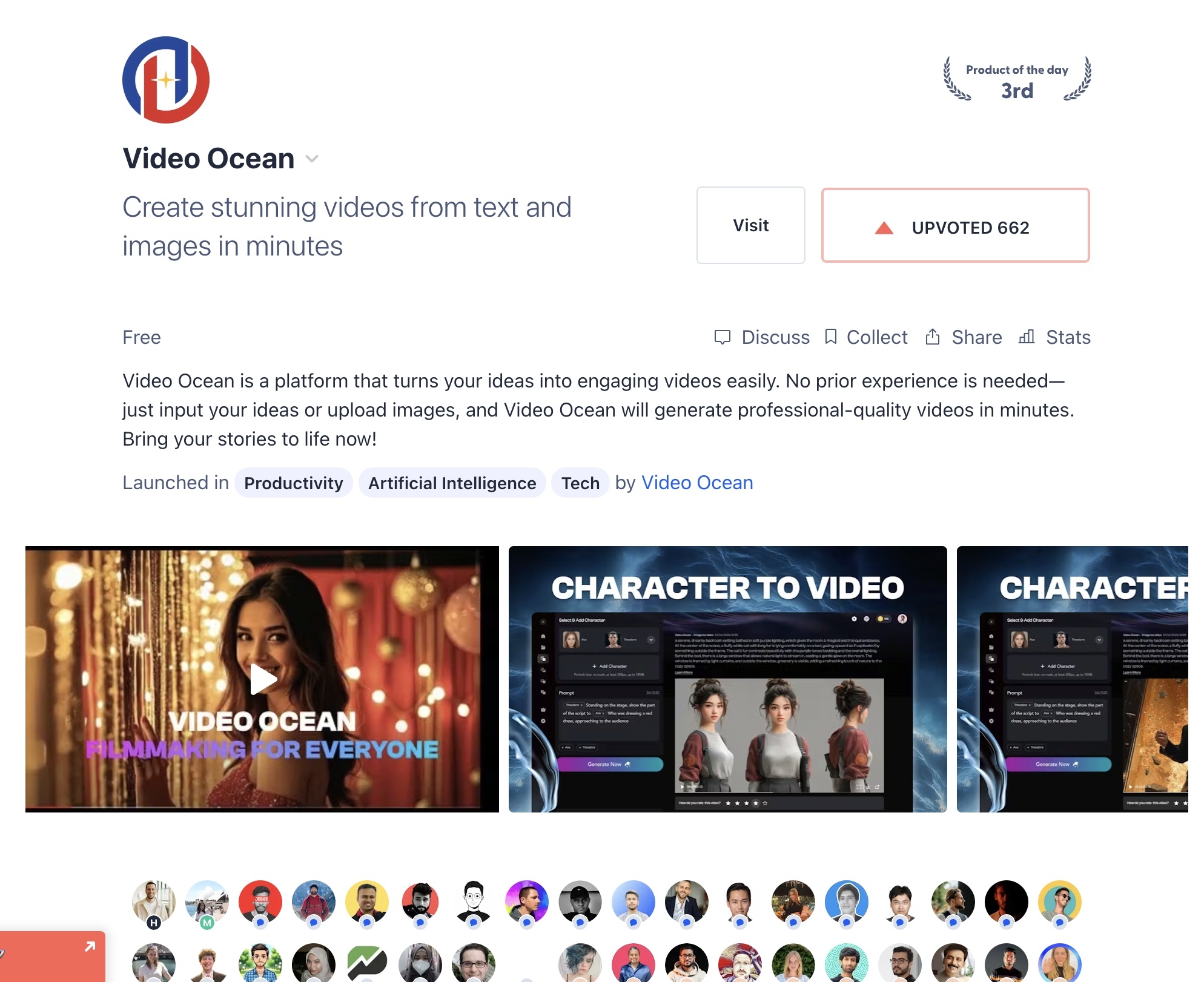

The recently launched free video generation platform, Video Ocean, has garnered widespread attention and praise. It supports creating videos in any style with any character, offering features like text-to-video, image-to-video, and character-based video generation. It has even climbed to the third spot on Product Hunt's global product popularity leaderboard.

Experience it here: https://video-ocean.com/en

How does Video Ocean achieve rapid iteration at an extremely low cost? An open-source solution is now available.

By contributing derivative work built on this project to the open-source community, you can also claim an H200 GPU voucher worth $100.

Open-source repository: https://github.com/hpcaitech/Open-Sora

Colossal-AI

Colossal-AI is the foundational training and inference system for large AI models behind Video Ocean. It ranks first globally among open-source AI MLSys projects on GitHub, with nearly 40,000 stars.

Built on PyTorch, Colossal-AI employs efficient multi-dimensional parallelism and heterogeneous memory management to reduce the development and application costs of training, fine-tuning, and running inference on large AI models. It has collaborated with numerous Fortune Global 500 companies to develop and optimize large AI models.

For the development of large video models similar to Sora, Colossal-AI has implemented various optimizations, achieving up to a 2.61-fold increase in Model FLOPs Utilization (MFU) compared to existing open-source solutions, significantly reducing costs.

Asynchronous Checkpoint

When training with large-scale clusters, the error rate increases significantly as the cluster size grows, leading to frequent interruptions in training. Rapidly saving checkpoints in such scenarios not only enhances overall training efficiency but also facilitates fault tolerance and quick recovery.

To address this, Colossal-AI has introduced an asynchronous checkpoint feature. For video generation models of 10 billion parameters, this feature reduces the time required to save DiT models, EMA models, and optimizers from over 300 seconds to under 10 seconds, saving up to 97% of the time.

The checkpoint-saving process primarily consists of two steps:

- GPU to CPU transfer (D2H)
- Disk write

By executing these steps in a pipeline manner, the efficiency is significantly improved. Moreover, the process runs in the background using multi-threading (C++), ensuring it does not block the main training process. The GPU-to-CPU transfer is performed on a separate CUDA stream, ensuring it does not interfere with the main computation stream.

This innovation greatly enhances the reliability and efficiency of large-scale AI model training.
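The two-step pipeline described above can be illustrated with a small standard-library sketch. This is not Colossal-AI's implementation (which the text says uses C++ threads and a separate CUDA stream); `stage_to_host`, `AsyncCheckpointWriter`, and `save_checkpoint` are hypothetical names, and the device-to-host copy is simulated with a plain byte copy. The point is only that staging shard n+1 can overlap with writing shard n to disk.

```python
import os
import queue
import threading


def stage_to_host(shard: bytes) -> bytes:
    """Stand-in for the GPU-to-CPU (D2H) copy; here it is just a host-side copy."""
    return bytes(shard)


class AsyncCheckpointWriter:
    """Hypothetical helper: disk writes run on a background thread."""

    def __init__(self, max_pending: int = 2):
        # A bounded queue caps how many staged shards wait in host memory.
        self._queue = queue.Queue(maxsize=max_pending)
        self._error = None
        self._thread = threading.Thread(target=self._write_loop, daemon=True)
        self._thread.start()

    def _write_loop(self):
        while True:
            item = self._queue.get()
            if item is None:  # sentinel: no more shards
                break
            path, host_copy = item
            try:
                with open(path, "wb") as f:
                    f.write(host_copy)
            except OSError as exc:
                self._error = exc

    def save(self, path: str, shard: bytes) -> None:
        # Step 1 (staging) happens here; step 2 (disk write) happens in the
        # background, so the caller can stage the next shard immediately.
        self._queue.put((path, stage_to_host(shard)))

    def close(self) -> None:
        self._queue.put(None)
        self._thread.join()
        if self._error is not None:
            raise self._error


def save_checkpoint(directory: str, shards: dict) -> None:
    writer = AsyncCheckpointWriter()
    for name, shard in shards.items():
        writer.save(os.path.join(directory, name + ".bin"), shard)
    # In a real training loop, close() would be deferred until the next
    # checkpoint so that training continues while the writes finish.
    writer.close()
```

The bounded queue is a design choice for this sketch: it keeps host memory usage predictable at the cost of occasionally making the caller wait when the disk falls behind.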

[Figure: Pipeline checkpoint saving]

In addition, checkpoints are stored in the safetensors format, whose safe, zero-copy loading further improves read performance.

ZeRO Memory/Communication Optimizations

[Figure: Common ZeRO communication methods]

[Figure: Optimized ZeRO communication methods]

Colossal-AI enhances the ZeRO framework by optimizing communication and memory usage, focusing on overlapping operations and reducing overhead, to achieve higher training efficiency. Here's a detailed breakdown:

1. Overlapping All-Gather with Forward Computation

- Innovation: Parameters' All-Gather operations are overlapped with the forward computation of the next training iteration.
- Result: Reduces idle GPU time and improves overall training throughput.

2. Bucket-Based Communication Optimization

- Issue: Common bucket-based communication in ZeRO-DP (Data Parallelism) involves significant memory copy operations, which become costly as the cluster scales.
- Solution:
  - Memory Copy Fusion: Combines multiple memory copy operations into a single process, reducing overhead.
  - Benefit: Decreases memory copy costs, especially in large-scale clusters.

3. Addressing Communication Speed Decay

(a) Bucket Size Optimization

- Problem: Communication operators like All-Gather and Reduce-Scatter experience severe speed degradation with small bucket sizes (e.g., 25MB, the default in PyTorch DDP).
- Solution:
  - Bucket Size Adjustment: Increase bucket size to mitigate communication slowdown.
  - Trade-Off: Overly large bucket sizes reduce the overlap between computation and communication.
- Optimization Formula:
  - Colossal-AI uses rough searches to identify an optimal bucket size, followed by fine-tuning through practical testing.
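The bucket-size trade-off and the coarse-then-fine search can be sketched as follows. The cost model `toy_step_cost` is an invented stand-in, not a measurement or a formula from Colossal-AI: it charges a fixed launch latency per bucket (which penalizes small buckets) plus the time of one bucket that cannot overlap with computation (which penalizes large buckets). In practice, `measure` would time a few real training steps instead.

```python
import math


def toy_step_cost(bucket_mb, total_mb=4096.0, latency_ms=0.5,
                  bandwidth_mb_per_ms=20.0):
    """Hypothetical cost model: per-bucket launch latency + one exposed bucket."""
    num_buckets = math.ceil(total_mb / bucket_mb)
    launch_overhead = num_buckets * latency_ms
    exposed_tail = bucket_mb / bandwidth_mb_per_ms
    return launch_overhead + exposed_tail


def search_bucket_size(measure, low_mb=25, high_mb=2048, fine_steps=8):
    """Coarse pass over doubling sizes, then a finer pass around the best one."""
    coarse = []
    size = low_mb
    while size <= high_mb:
        coarse.append(size)
        size *= 2
    best = min(coarse, key=measure)

    # Fine pass: evenly spaced candidates between best/2 and best*2.
    lo = max(low_mb, best / 2)
    hi = min(high_mb, best * 2)
    fine = [lo + i * (hi - lo) / fine_steps for i in range(fine_steps + 1)]
    return min(fine + [best], key=measure)


best_mb = search_bucket_size(toy_step_cost)
```

With this toy model, the selected size lands well above the 25MB starting point, which mirrors the qualitative claim in the text; the actual optimum depends on the cluster and the model.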

(b) 2D Torus Communication

- Problem: Communication deceleration becomes severe as cluster size increases.
- Solution:
  - Implements 2D Torus Communication, a structured communication topology.
  - Benefit: Reduces communication latency in large-scale clusters by optimizing message routing and reducing congestion.

[Figure: 2D Torus Communication]
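To give a feel for why a 2D layout helps, here is a pure-Python simulation of a two-stage All-Gather on a `rows x cols` grid of ranks: first within each row, then within each column. This is an illustrative sketch under the assumption that the 2D scheme decomposes one large collective into two smaller ones; the function names are hypothetical and the article does not specify Colossal-AI's actual algorithm. The step count returned is the number of ring steps only; it ignores that second-stage messages are larger.

```python
def ring_all_gather(group_data):
    """Simulate an All-Gather inside one group.

    Every member ends up with the concatenation of all members' data.
    A ring over n members needs n - 1 steps.
    """
    gathered = [item for member in group_data for item in member]
    return [list(gathered) for _ in group_data], len(group_data) - 1


def all_gather_2d(shards, rows, cols):
    """Two-stage All-Gather; rank r sits at (r // cols, r % cols)."""
    assert len(shards) == rows * cols
    held = [[s] for s in shards]

    # Stage 1: gather along each row (rings of `cols` ranks, run concurrently).
    for i in range(rows):
        ranks = [i * cols + j for j in range(cols)]
        results, row_steps = ring_all_gather([held[r] for r in ranks])
        for r, res in zip(ranks, results):
            held[r] = res

    # Stage 2: gather along each column (rings of `rows` ranks).
    for j in range(cols):
        ranks = [i * cols + j for i in range(rows)]
        results, col_steps = ring_all_gather([held[r] for r in ranks])
        for r, res in zip(ranks, results):
            held[r] = res

    return held, row_steps + col_steps


held, steps_2d = all_gather_2d(list(range(8)), rows=2, cols=4)
steps_flat = 8 - 1  # a single ring over all 8 ranks
```

For 8 ranks arranged as 2 x 4, the two-stage version takes 4 ring steps instead of 7, and every rank still ends with all 8 shards in order.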

With these optimizations combined, scaling efficiency in video model training remains above 95% even at large cluster sizes, achieving approximately 30% acceleration in large-scale multi-node training.

Data Loading Optimization

loader = DataLoader(dataset, batch_size=2, collate_fn=collate_wrapper, pin_memory=True)

PyTorch's DataLoader provides an automatic pin memory feature that significantly speeds up moving data from the CPU to the GPU, leveraging Python multithreading to implement this functionality. However, the feature has limitations, especially when dealing with high-resolution or long videos in training:

Issues with Pin Memory

- Python GIL:
  - Python's Global Interpreter Lock (GIL) limits multithreading, meaning the pin memory implementation isn't fully parallel.
- cudaMallocHost Blocking:
  - The pin memory operation calls cudaMallocHost, which can block the main process and impact the main CUDA stream.
  - For high-resolution or long videos, the memory allocation requirements are larger, making the issue more prominent.
- Asynchrony:
  - Enabling pin memory may cause one process to execute slower than others, resulting in desynchronization, which significantly impacts training efficiency in large-scale cluster scenarios.

Colossal-AI's Solution

To address these issues, Colossal-AI enhances the dataloader with the following strategies:

- Pre-Allocation and Caching:
  - Implements a mechanism to pre-allocate and cache pin memory, reducing runtime calls to cudaMallocHost during training.
  - Avoids memory allocation during training, ensuring smoother operation.
- Cache Optimization:
  - With proper configuration, the cache hit rate can reach 100%, eliminating any negative impact on training speed.
  - Efficient memory management ensures the cache does not consume excessive RAM.
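The pre-allocate-and-cache idea can be sketched with a small buffer pool. `PinnedBufferCache` is a hypothetical class, not Colossal-AI's API, and `bytearray` stands in for a pinned-memory allocator so that the example runs without a GPU. In a real setup the allocator might be something like `lambda n: torch.empty(n, dtype=torch.uint8, pin_memory=True)`; that substitution is an assumption, not something the article states.

```python
class PinnedBufferCache:
    """Hypothetical pool that reuses buffers instead of allocating per batch."""

    def __init__(self, allocate=bytearray):
        self._allocate = allocate   # stand-in for a pinned-memory allocator
        self._free = {}             # buffer size -> list of idle buffers
        self.hits = 0
        self.misses = 0

    def preallocate(self, size, count):
        """Pay the allocation cost up front, before training starts."""
        idle = self._free.setdefault(size, [])
        idle.extend(self._allocate(size) for _ in range(count))

    def acquire(self, size):
        idle = self._free.get(size)
        if idle:
            self.hits += 1
            return idle.pop()
        # Cache miss: this is the slow path the cache is meant to avoid.
        self.misses += 1
        return self._allocate(size)

    def release(self, buf):
        """Return a buffer to the pool once its data has reached the GPU."""
        self._free.setdefault(len(buf), []).append(buf)

    @property
    def hit_rate(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


cache = PinnedBufferCache()
cache.preallocate(size=1024, count=2)   # e.g. two batches in flight
for _ in range(10):
    first = cache.acquire(1024)
    second = cache.acquire(1024)
    cache.release(first)
    cache.release(second)
```

When the pool is sized to the number of batches in flight and batch sizes are fixed, every `acquire` in the loop is served from the pool, which is the "100% hit rate with proper configuration" situation the text describes.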

FP8 Mixed Precision

Colossal-AI supports the mainstream BF16 (O2) + FP8 (O1) next-generation mixed-precision training scheme. With just one line of code, it delivers an average 30% acceleration for mainstream large models, ensures training convergence, and reduces the development cost of large models.


When using it, simply enable FP8 during the initialization of the plugin.

from colossalai.booster.plugin import GeminiPlugin, HybridParallelPlugin, LowLevelZeroPlugin

...

plugin = LowLevelZeroPlugin(..., use_fp8=True)

plugin = GeminiPlugin(..., use_fp8=True)

plugin = HybridParallelPlugin(..., use_fp8=True)

Additionally, there is no need to introduce extra hand-written CUDA operators, avoiding lengthy AOT compilation times and complex compilation environment configurations.

Sequence Parallelism Optimization

Colossal-AI provides extensive support for multiple sequence parallelism paradigms tailored to the Video Ocean model. These include:

- Tensor Sequence Parallelism
- Ring Attention (Context Parallelism)
- Sequence Parallelism (Ulysses)

These paradigms can be used individually or in combination, offering flexibility based on model and hardware requirements.
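As an illustration of one of these paradigms, the following pure-Python sketch simulates the general Ring Attention idea: each simulated device keeps its own chunk of queries, while key/value chunks rotate around the ring, and partial results are merged with a running (online) softmax. It is a single-head, non-causal toy written for clarity, not Colossal-AI's implementation, and it does not cover the ND-Ring communication optimization.

```python
import math


def full_attention(q, k, v):
    """Reference: softmax(q k^T / sqrt(d)) v on lists of vectors."""
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for qi in q:
        scores = [scale * sum(a * b for a, b in zip(qi, kj)) for kj in k]
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        z = sum(weights)
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) / z
                    for c in range(len(v[0]))])
    return out


def ring_attention(q_chunks, k_chunks, v_chunks):
    """Queries stay put; key/value blocks are passed around the ring."""
    world = len(q_chunks)
    dim_v = len(v_chunks[0][0])
    scale = 1.0 / math.sqrt(len(q_chunks[0][0]))
    # Per-device running state for the online softmax.
    run_max = [[-math.inf] * len(qc) for qc in q_chunks]
    run_sum = [[0.0] * len(qc) for qc in q_chunks]
    run_out = [[[0.0] * dim_v for _ in qc] for qc in q_chunks]
    kv = list(zip(k_chunks, v_chunks))  # kv[d] = block currently on device d

    for _ in range(world):
        for dev in range(world):
            k_blk, v_blk = kv[dev]
            for i, qi in enumerate(q_chunks[dev]):
                scores = [scale * sum(a * b for a, b in zip(qi, kj))
                          for kj in k_blk]
                new_max = max(run_max[dev][i], max(scores))
                rescale = math.exp(run_max[dev][i] - new_max)
                weights = [math.exp(s - new_max) for s in scores]
                run_sum[dev][i] = run_sum[dev][i] * rescale + sum(weights)
                run_out[dev][i] = [
                    o * rescale + sum(w * vj[c] for w, vj in zip(weights, v_blk))
                    for c, o in enumerate(run_out[dev][i])
                ]
                run_max[dev][i] = new_max
        kv = kv[-1:] + kv[:-1]  # each device hands its block to the next one

    return [[[o / s for o in row] for row, s in zip(outs, sums)]
            for outs, sums in zip(run_out, run_sum)]
```

Because no device ever holds the full key/value sequence, peak activation memory per device scales with the chunk length rather than the full sequence length, which is what makes the approach attractive for long video sequences.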

Key Optimizations

- Communication Optimization for Ring Attention:
  - Based on the characteristics of video data (e.g., exceptionally large activations), Colossal-AI optimizes Ring Attention communication using ND-Ring to handle complex hardware configurations efficiently.
- Scalability:
  - Designed for video models with tens of billions of parameters, especially when training with high-resolution and longer video sequences.
  - Large-scale multi-node and hybrid parallel training setups are the default in these scenarios.
- Cross-node Sequence Parallelism:
  - Addresses the challenges of sequences spanning across machines due to large video sizes.
  - Provides significant acceleration, particularly in cases where inter-node communication overhead is a bottleneck.

These sequence parallelism optimizations ensure efficient training of large-scale video models, even in demanding configurations with extensive video data and complex hardware setups.

Convolutional Layer Tensor Parallelism Optimization

Colossal-AI introduces targeted optimizations for VAE (Variational Autoencoder) models tailored to high-resolution and long-video data. Specifically, it addresses challenges posed by cuDNN 3D convolutions, which generate extremely large activations with such data.

Key Optimizations

- Chunked Convolution:
  - Implements chunking mechanisms for convolution operations, reducing memory overhead and improving computational efficiency.
- Tensor Parallelism for VAE:
  - Unlike traditional tensor parallelism in Transformers, Colossal-AI adopts a new tensor parallelism paradigm designed to handle the massive activations specific to VAE models.
  - This approach achieves acceleration and memory optimization while maintaining accuracy.
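The chunking idea can be shown on a 1D convolution, which is enough to see the mechanism: process the input in windows, with each window extended by a "halo" of `len(kernel) - 1` samples so the chunk boundaries produce the same outputs as an unchunked pass. This is a minimal sketch with hypothetical function names; the article does not describe how Colossal-AI chunks its 3D convolutions, so treat the 1D case as an analogy along a single axis (for example, time).

```python
def conv1d_valid(signal, kernel):
    """Plain 'valid' cross-correlation over the whole input."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]


def conv1d_chunked(signal, kernel, chunk_size):
    """Same result as conv1d_valid, computed one output chunk at a time.

    Only chunk_size + len(kernel) - 1 input samples are needed per chunk,
    so intermediate buffers stay small regardless of the input length.
    """
    k = len(kernel)
    n_out = len(signal) - k + 1
    out = []
    for start in range(0, n_out, chunk_size):
        stop = min(start + chunk_size, n_out)
        window = signal[start:stop + k - 1]  # chunk plus a halo of k - 1 samples
        out.extend(conv1d_valid(window, kernel))
    return out


signal = list(range(20))
kernel = [1, -2, 1]
chunked = conv1d_chunked(signal, kernel, chunk_size=6)
```

The chunk size trades peak memory against the number of kernel launches and the redundant halo reads, so it would normally be tuned per resolution and video length.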

Claim GPU Credits

To thank developers for their support and recognition, the following GPU credits are available for contributions based on Colossal-AI or Open-Sora:

- High-Quality Projects
  - Build meaningful and high-quality open-source projects, such as fine-tuning, pre-trained models, applications, or algorithm research papers.
  - Reward: $100 H200 GPU Credit at hpc-ai.com.
- Publishing Open-Source Projects
  - Release related open-source projects.
  - Reward: $10 H200 GPU Credit at hpc-ai.com.

Details for claiming credits: Refer to https://colossalai.org/docs/get_started/bonus/

Open-source repository: https://github.com/hpcaitech/Open-Sora
